GraphQL Transform

The GraphQL Transform provides a simple-to-use abstraction that helps you quickly create backends for your web and mobile applications on AWS. With the GraphQL Transform, you define your application’s data model using the GraphQL Schema Definition Language (SDL), and the library converts your SDL definition into a set of fully descriptive AWS CloudFormation templates that implement your data model.

For example, you might create the backend for a blog like this:

type Blog @model {

id: ID!

name: String!

posts: [Post] @connection(name: "BlogPosts")

}

type Post @model {

id: ID!

title: String!

blog: Blog @connection(name: "BlogPosts")

comments: [Comment] @connection(name: "PostComments")

}

type Comment @model {

id: ID!

content: String

post: Post @connection(name: "PostComments")

}

When used along with tools like the Amplify CLI, the GraphQL Transform simplifies the process of developing, deploying, and maintaining GraphQL APIs. With it, you define your API using the GraphQL Schema Definition Language (SDL) and can then use automation to transform it into a fully descriptive AWS CloudFormation template that implements the spec. The transform also provides a framework through which you can define your own transformers as @directives for custom workflows.

Quick Start

Navigate into the root of a JavaScript, iOS, or Android project and run:

amplify init

Follow the wizard to create a new app. After finishing the wizard run:

amplify add api

# Select the graphql option and when asked if you

# have a schema, say No.

# Select one of the default samples. You can change it later.

# Choose to edit the schema and it will open your schema.graphql in your editor.

You can leave the sample as is or try this schema:

type Blog @model {

id: ID!

name: String!

posts: [Post] @connection(name: "BlogPosts")

}

type Post @model {

id: ID!

title: String!

blog: Blog @connection(name: "BlogPosts")

comments: [Comment] @connection(name: "PostComments")

}

type Comment @model {

id: ID!

content: String

post: Post @connection(name: "PostComments")

}

Once you are happy with your schema, save the file and press Enter in your terminal window. If no error messages appear, the transformation was successful and you can deploy your new API.

amplify push

Open the AWS CloudFormation console to view the deployed stack. You can also find your project assets in the amplify/backend folder under your API.

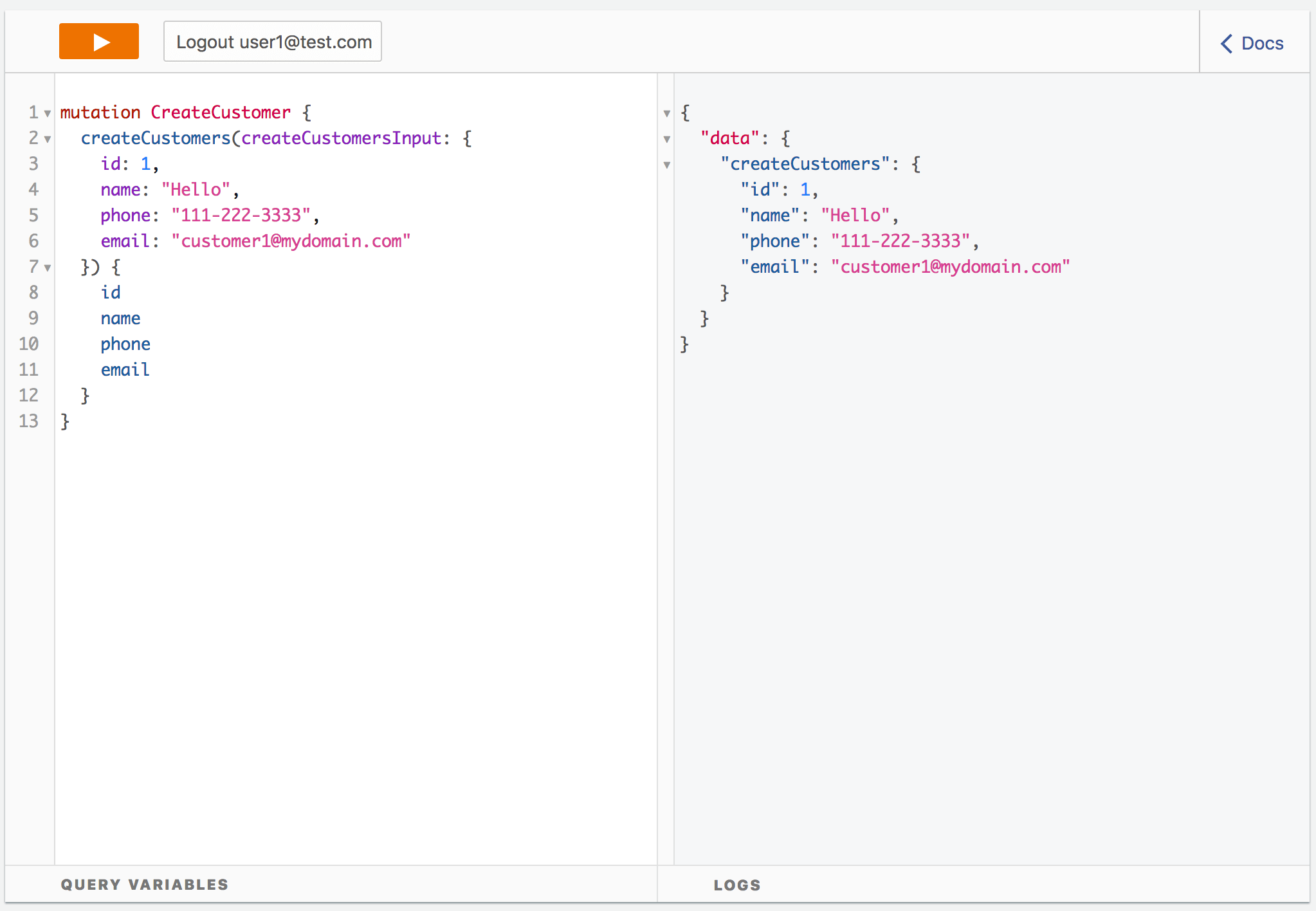

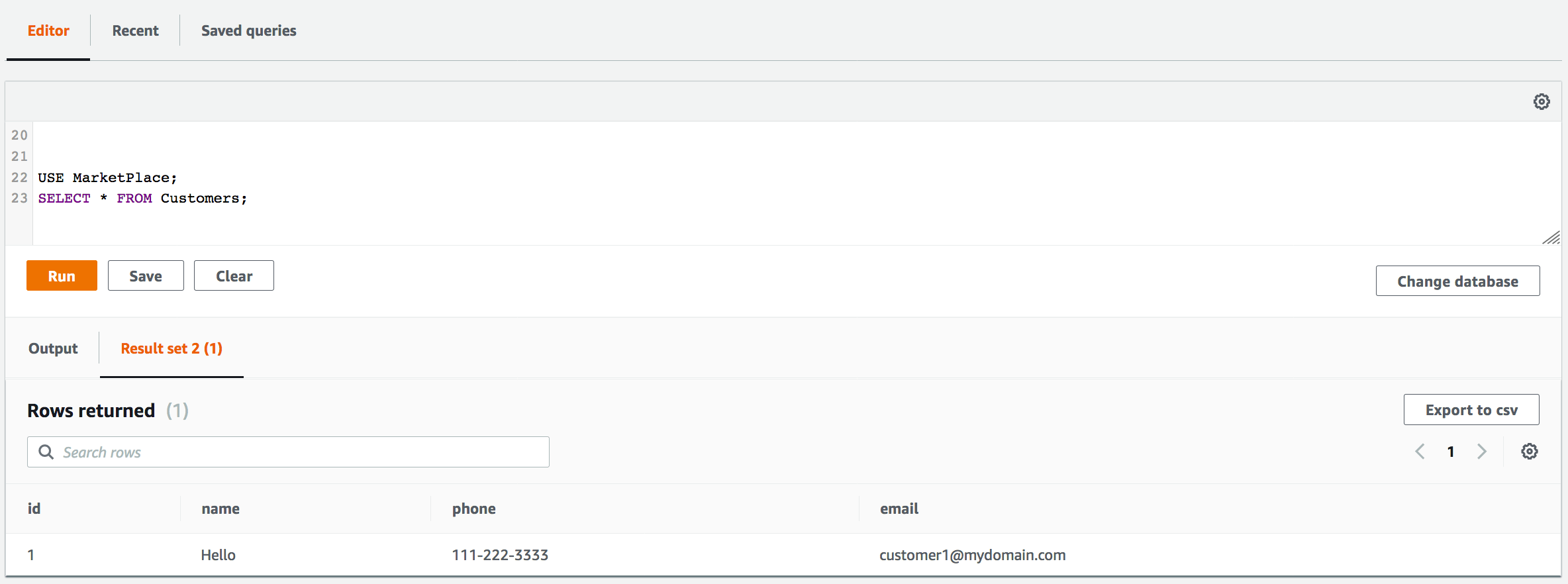

Once the API is finished deploying, try going to the AWS AppSync console and running some of these queries in your new API’s query page.

# Create a blog. Remember the returned id.

# Provide the returned id as the "blogId" variable.

mutation CreateBlog {

createBlog(input: {

name: "My New Blog!"

}) {

id

name

}

}

# Create a post and associate it with the blog via the "postBlogId" input field.

# Provide the returned id as the "postId" variable.

mutation CreatePost($blogId:ID!) {

createPost(input:{title:"My Post!", postBlogId: $blogId}) {

id

title

blog {

id

name

}

}

}

# Provide the returned id from the CreateBlog mutation as the "blogId" variable

# in the "variables" pane (bottom left pane) of the query editor:

{

"blogId": "returned-id-goes-here"

}

# Create a comment and associate it with the post via the "commentPostId" input field.

mutation CreateComment($postId:ID!) {

createComment(input:{content:"A comment!", commentPostId:$postId}) {

id

content

post {

id

title

blog {

id

name

}

}

}

}

# Provide the returned id from the CreatePost mutation as the "postId" variable

# in the "variables" pane (bottom left pane) of the query editor:

{

"postId": "returned-id-goes-here"

}

# Get a blog, its posts, and its posts' comments.

query GetBlog($blogId:ID!) {

getBlog(id:$blogId) {

id

name

posts(filter: {

title: {

eq: "My Post!"

}

}) {

items {

id

title

comments {

items {

id

content

}

}

}

}

}

}

# List all blogs, their posts, and their posts' comments.

query ListBlogs {

listBlogs { # Try adding: listBlogs(filter: { name: { eq: "My New Blog!" } })

items {

id

name

posts { # or try adding: posts(filter: { title: { eq: "My Post!" } })

items {

id

title

comments { # and so on ...

items {

id

content

}

}

}

}

}

}

}

If you want to update your API, open your project’s backend/api/~apiname~/schema.graphql file (NOT the one in the backend/api/~apiname~/build folder) and edit it in your favorite code editor. You can compile the backend/api/~apiname~/schema.graphql by running:

amplify api gql-compile

and view the compiled schema output in backend/api/~apiname~/build/schema.graphql.

You can then push updated changes with:

amplify push

Directives

@model

Object types that are annotated with @model are top-level entities in the generated API. Objects annotated with @model are stored in Amazon DynamoDB and are capable of being protected via @auth, related to other objects via @connection, and streamed into Amazon Elasticsearch via @searchable. You may also apply the @versioned directive to instantly add a version field and conflict detection to a model type.

Definition

The following SDL defines the @model directive that allows you to easily define top-level object types in your API that are backed by Amazon DynamoDB.

directive @model(

queries: ModelQueryMap,

mutations: ModelMutationMap,

subscriptions: ModelSubscriptionMap

) on OBJECT

input ModelMutationMap { create: String, update: String, delete: String }

input ModelQueryMap { get: String, list: String }

input ModelSubscriptionMap {

onCreate: [String]

onUpdate: [String]

onDelete: [String]

level: ModelSubscriptionLevel

}

enum ModelSubscriptionLevel { off public on }

Usage

Define a GraphQL object type and annotate it with the @model directive to store objects of that type in DynamoDB and automatically configure CRUDL queries and mutations.

type Post @model {

id: ID! # id: ID! is a required attribute.

title: String!

tags: [String!]!

}

You may also override the names of any generated queries, mutations and subscriptions, or remove operations entirely.

type Post @model(queries: { get: "post" }, mutations: null, subscriptions: null) {

id: ID!

title: String!

tags: [String!]!

}

This would create and configure a single query field post(id: ID!): Post and no mutation fields.
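The effect of the queries and mutations arguments can be pictured with a small Python model. This is only a sketch of the behavior described above, not the transformer's implementation: the function name generated_fields, the DEFAULT sentinel, and the naive "add an s" pluralization are all assumptions made for illustration.

```python
# Hypothetical model of how @model arguments select generated fields.
# Illustration only; the real transformer is not implemented this way.
DEFAULT = object()  # marks "argument not provided"


def generated_fields(type_name, queries=DEFAULT, mutations=DEFAULT):
    """Return the query and mutation field names for one @model type."""
    default_queries = {
        "get": f"get{type_name}",
        "list": f"list{type_name}s",  # assumption: naive pluralization
    }
    default_mutations = {
        "create": f"create{type_name}",
        "update": f"update{type_name}",
        "delete": f"delete{type_name}",
    }

    def pick(arg, defaults):
        if arg is DEFAULT:   # argument omitted: generate every operation
            return list(defaults.values())
        if arg is None:      # argument set to null: generate nothing
            return []
        return list(arg.values())  # only the operations that were named

    return {
        "queries": pick(queries, default_queries),
        "mutations": pick(mutations, default_mutations),
    }
```

Calling generated_fields("Post", queries={"get": "post"}, mutations=None) models the example above: one query field named post and no mutation fields.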

Generates

A single @model directive configures the following AWS resources:

- An Amazon DynamoDB table with PAY_PER_REQUEST billing mode enabled by default.

- An AWS AppSync DataSource configured to access the table above.

- An AWS IAM role attached to the DataSource that allows AWS AppSync to call the above table on your behalf.

- Up to 8 resolvers (create, update, delete, get, list, onCreate, onUpdate, onDelete), but this is configurable via the queries, mutations, and subscriptions arguments on the @model directive.

- Input objects for create, update, and delete mutations.

- Filter input objects that allow you to filter objects in list queries and connection fields.

This input schema document

type Post @model {

id: ID!

title: String!

metadata: MetaData

}

type MetaData {

category: Category

}

enum Category { comedy news }

would generate the following schema parts

type Post {

id: ID!

title: String!

metadata: MetaData

}

type MetaData {

category: Category

}

enum Category {

comedy

news

}

input MetaDataInput {

category: Category

}

enum ModelSortDirection {

ASC

DESC

}

type ModelPostConnection {

items: [Post]

nextToken: String

}

input ModelStringFilterInput {

ne: String

eq: String

le: String

lt: String

ge: String

gt: String

contains: String

notContains: String

between: [String]

beginsWith: String

}

input ModelIDFilterInput {

ne: ID

eq: ID

le: ID

lt: ID

ge: ID

gt: ID

contains: ID

notContains: ID

between: [ID]

beginsWith: ID

}

input ModelIntFilterInput {

ne: Int

eq: Int

le: Int

lt: Int

ge: Int

gt: Int

contains: Int

notContains: Int

between: [Int]

}

input ModelFloatFilterInput {

ne: Float

eq: Float

le: Float

lt: Float

ge: Float

gt: Float

contains: Float

notContains: Float

between: [Float]

}

input ModelBooleanFilterInput {

ne: Boolean

eq: Boolean

}

input ModelPostFilterInput {

id: ModelIDFilterInput

title: ModelStringFilterInput

and: [ModelPostFilterInput]

or: [ModelPostFilterInput]

not: ModelPostFilterInput

}

type Query {

getPost(id: ID!): Post

listPosts(filter: ModelPostFilterInput, limit: Int, nextToken: String): ModelPostConnection

}

input CreatePostInput {

title: String!

metadata: MetaDataInput

}

input UpdatePostInput {

id: ID!

title: String

metadata: MetaDataInput

}

input DeletePostInput {

id: ID

}

type Mutation {

createPost(input: CreatePostInput!): Post

updatePost(input: UpdatePostInput!): Post

deletePost(input: DeletePostInput!): Post

}

type Subscription {

onCreatePost: Post @aws_subscribe(mutations: ["createPost"])

onUpdatePost: Post @aws_subscribe(mutations: ["updatePost"])

onDeletePost: Post @aws_subscribe(mutations: ["deletePost"])

}

@key

The @key directive makes it simple to configure custom index structures for @model types.

Amazon DynamoDB is a key-value and document database that delivers single-digit millisecond performance at any scale, but making it work for your access patterns requires a bit of forethought. DynamoDB query operations may use at most two attributes to efficiently query data. The first attribute passed to a query (the hash key) must use strict equality, and the second attribute (the sort key) may use gt, ge, lt, le, eq, beginsWith, and between. With these operations, DynamoDB can effectively implement a wide variety of access patterns that are powerful enough for the majority of applications.

When modeling your data during schema design there are common patterns that you may need to leverage. We provide a fully working schema with 17 patterns related to relational designs.
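The key rules described above (strict equality on the hash key, a limited set of comparison operators on the sort key) can be modeled in a few lines of Python. This is only an in-memory illustration over a list of dictionaries; the function name query and the attribute names in the usage note are hypothetical, and real queries are executed by DynamoDB itself.

```python
# Illustrative model of DynamoDB key conditions; not DynamoDB client code.
SORT_KEY_OPERATORS = {
    "eq": lambda value, operand: value == operand,
    "gt": lambda value, operand: value > operand,
    "ge": lambda value, operand: value >= operand,
    "lt": lambda value, operand: value < operand,
    "le": lambda value, operand: value <= operand,
    "beginsWith": lambda value, operand: value.startswith(operand),
    "between": lambda value, operand: operand[0] <= value <= operand[1],
}


def query(items, hash_key, hash_value, sort_key=None, operator=None, operand=None):
    """Return items whose hash key equals hash_value and whose sort key,
    when a condition is supplied, satisfies that condition."""
    results = []
    for item in items:
        if item[hash_key] != hash_value:  # hash key: strict equality only
            continue
        if sort_key is not None:
            check = SORT_KEY_OPERATORS[operator]
            if not check(item[sort_key], operand):
                continue
        results.append(item)
    return results
```

For example, query(orders, "customerEmail", "me@email.com", "createdAt", "beginsWith", "2019") would keep only that customer's records whose createdAt value starts with "2019".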

Definition

directive @key(fields: [String!]!, name: String, queryField: String) on OBJECT

Argument

| Argument | Description |
|---|---|
| fields | A list of fields that should comprise the @key, used in conjunction with an @model type. The first field in the list will always be the HASH key. If two fields are provided the second field will be the SORT key. If more than two fields are provided, a single composite SORT key will be created from a combination of fields[1...n]. All generated GraphQL queries & mutations will be updated to work with custom @key directives. |
| name | When provided, specifies the name of the secondary index. When omitted, specifies that the @key is defining the primary index. You may have at most one primary key per table and therefore you may have at most one @key that does not specify a name per @model type. |
| queryField | When defining a secondary index (by specifying the name argument), this specifies that a new top level query field that queries the secondary index should be generated with the given name. |

How to use @key

When designing data models using the @key directive, the first step should be to write down your application’s expected access patterns. For example, let’s say we were building an e-commerce application and needed to implement access patterns like:

- Get customers by email.

- Get orders by customer by createdAt.

- Get items by order by status by createdAt.

- Get items by status by createdAt.

Let’s take a look at how you would define custom keys to implement these access patterns in your schema.graphql.

# Get customers by email.

type Customer @model @key(fields: ["email"]) {

email: String!

username: String

}

A @key without a name specifies the key for the DynamoDB table’s primary index. You may only provide 1 @key without a name per @model type. The example above shows the simplest case where we are specifying that the table’s primary index should have a simple key where the hash key is email. This allows us to get unique customers by their email.

query GetCustomerByEmail {

getCustomer(email:"me@email.com") {

email

username

}

}

This is great for simple lookup operations, but what if we need to perform slightly more complex queries?

# Get orders by customer by createdAt.

type Order @model @key(fields: ["customerEmail", "createdAt"]) {

customerEmail: String!

createdAt: String!

orderId: ID!

}

This @key above allows us to efficiently query Order objects by both a customerEmail and the createdAt time stamp. The @key above creates a DynamoDB table where the primary index’s hash key is customerEmail and the sort key is createdAt. This allows us to write queries like this:

query ListOrdersForCustomerIn2019 {

listOrders(customerEmail:"me@email.com", createdAt: { beginsWith: "2019" }) {

items {

orderId

customerEmail

createdAt

}

}

}

The query above shows how we can use compound key structures to implement more powerful query patterns on top of DynamoDB, but we are not quite done yet. Because DynamoDB limits you to querying by at most two attributes at a time, the @key directive streamlines the process of creating composite sort keys so that you can support querying by more than two attributes at a time. For example, we can implement “Get items by order, status, and createdAt” as well as “Get items by status and createdAt” for a single @model with this schema.

type Item @model

@key(fields: ["orderId", "status", "createdAt"])

@key(name: "ByStatus", fields: ["status", "createdAt"], queryField: "itemsByStatus") {

orderId: ID!

status: Status!

createdAt: AWSDateTime!

name: String!

}

enum Status {

DELIVERED

IN_TRANSIT

PENDING

UNKNOWN

}

The primary @key with 3 fields performs a bit more magic than the 1 and 2 field variants. The first field orderId will be the HASH key as expected, but the SORT key will be a new composite key named status#createdAt that is made of the status and createdAt fields on the @model. The @key directive creates the table structures and also generates resolvers that inject composite key values for you during queries and mutations.
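As a rough illustration of the composite key described above, the following Python sketch derives the key names from a fields list and builds the stored composite value for one item. It assumes the values are joined with "#" in field order, as the status#createdAt name suggests; the helper names key_schema and composite_sort_value are hypothetical and do not correspond to anything in the generated resolvers.

```python
# Sketch of composite sort keys, assuming values are joined with "#".
def key_schema(fields):
    """Map a @key fields list to (hash key name, sort key name or None)."""
    hash_key = fields[0]
    if len(fields) == 1:
        return hash_key, None
    # Two fields: the second is the sort key.
    # More than two: one composite sort key named after fields[1...n].
    return hash_key, "#".join(fields[1:])


def composite_sort_value(item, fields):
    """Build the composite sort key value for one item from fields[1...n]."""
    return "#".join(str(item[name]) for name in fields[1:])
```

Under the same assumption, a beginsWith condition on status IN_TRANSIT and createdAt "2019" corresponds to the prefix produced by composite_sort_value({"status": "IN_TRANSIT", "createdAt": "2019"}, ["orderId", "status", "createdAt"]).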

Using this schema, you can query the primary index to get IN_TRANSIT items created in 2019 for a given order.

# Get items for order by status by createdAt.

query ListInTransitItemsForOrder {

listItems(orderId:"order1", statusCreatedAt: { beginsWith: { status: IN_TRANSIT, createdAt: "2019" }}) {

items {

orderId

status

createdAt

name

}

}

}

The query above exposes the statusCreatedAt argument that allows you to configure DynamoDB key condition expressions without worrying about how the composite key is formed under the hood. Using the same schema, you can get all PENDING items created in 2019 by querying the secondary index “ByStatus” via the Query.itemsByStatus field.

query ItemsByStatus {

itemsByStatus(status: PENDING, createdAt: {beginsWith:"2019"}) {

items {

orderId

status

createdAt

name

}

nextToken

}

}

Evolving APIs with @key

There are a few important things to think about when making changes to APIs using @key. When you need to enable a new access pattern or change an existing access pattern you should follow these steps.

- Create a new index that enables the new or updated access pattern.

- If adding a @key with 3 or more fields, you will need to backfill the new composite sort key for existing data. With a @key(fields: ["email", "status", "date"]), you would need to backfill the status#date field with composite key values made up of each object’s status and date fields joined by a #. You do not need to backfill data for @key directives with 1 or 2 fields.

- Deploy your additive changes and update any downstream applications to use the new access pattern.

- Once you are certain that you do not need the old index, remove its @key and deploy the API again.
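The backfill step in the list above can be sketched as a pure function over already-loaded items. This is a minimal sketch under stated assumptions: the function name backfill_composite_key is hypothetical, items are plain dictionaries, and reading from and writing back to the table (for example with an AWS SDK) is deliberately left out.

```python
def backfill_composite_key(items, sort_fields, separator="#"):
    """Return copies of items with the composite sort key attribute added.

    sort_fields are the @key fields after the hash key, e.g. ["status", "date"].
    """
    attribute = separator.join(sort_fields)  # e.g. "status#date"
    updated = []
    for item in items:
        if attribute in item:  # already backfilled, copy unchanged
            updated.append(dict(item))
            continue
        value = separator.join(str(item[name]) for name in sort_fields)
        updated.append({**item, attribute: value})
    return updated
```

The function leaves the input untouched and returns new dictionaries, so the result can be compared against the original data before anything is written back.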

Combining @key with @connection

Secondary indexes created with the @key directive can be used to resolve connections when creating relationships between types. To learn how this works, check out the documentation for @connection.

@auth

Authorization is required for applications to interact with your GraphQL API. API keys are best used for public APIs (or parts of your schema which you wish to be public) or prototyping, and you must specify the expiration time before deploying. IAM authorization uses Signature Version 4 to sign requests, with policies attached to roles. OIDC tokens provided by Amazon Cognito User Pools or third-party OpenID Connect providers can also be used for authorization; simply enabling this provides basic access control that requires users to authenticate before they are granted top-level access to API actions. You can set finer-grained access controls using @auth on your schema, which leverages authorization metadata provided as part of these tokens or set on the database items themselves.

Object types that are annotated with @auth are protected by a set of authorization rules that give you finer control than the top-level authorization on an API. You may use the @auth directive on object type definitions and field definitions in your project’s schema.

When using the @auth directive on object type definitions that are also annotated with @model, all resolvers that return objects of that type will be protected. When using the @auth directive on a field definition, a resolver will be added to the field that authorizes access based on attributes found in the parent type.

Definition

# When applied to a type, augments the application with

# owner and group-based authorization rules.

directive @auth(rules: [AuthRule!]!) on OBJECT, FIELD_DEFINITION

input AuthRule {

allow: AuthStrategy!

provider: AuthProvider

ownerField: String # defaults to "owner" when using owner auth

identityClaim: String # defaults to "username" when using owner auth

groupClaim: String # defaults to "cognito:groups" when using Group auth

groups: [String] # Required when using Static Group auth

groupsField: String # defaults to "groups" when using Dynamic Group auth

operations: [ModelOperation] # Required for finer control

# The following arguments are deprecated. It is encouraged to use the 'operations' argument.

queries: [ModelQuery]

mutations: [ModelMutation]

}

enum AuthStrategy { owner groups private public }

enum AuthProvider { apiKey iam oidc userPools }

enum ModelOperation { create update delete read }

# The following objects are deprecated. It is encouraged to use ModelOperations.

enum ModelQuery { get list }

enum ModelMutation { create update delete }

Note: The operations argument was added to replace the ‘queries’ and ‘mutations’ arguments. The ‘queries’ and ‘mutations’ arguments will continue to work but it is encouraged to move to ‘operations’. If both are provided, the ‘operations’ argument takes precedence over ‘queries’.

Owner Authorization

# The simplest case

type Post @model @auth(rules: [{allow: owner}]) {

id: ID!

title: String!

}

# The long form way

type Post

@model

@auth(

rules: [

{allow: owner, ownerField: "owner", operations: [create, update, delete, read]},

])

{

id: ID!

title: String!

owner: String

}

Owner authorization specifies that a user can access an object. To do so, each object has an ownerField (by default “owner”) that stores ownership information and is verified in various ways during resolver execution.

You can use the operations argument to specify which operations are augmented as follows:

- read: If the record’s owner is not the same as the logged in user (via $ctx.identity.username), throw $util.unauthorized() in any resolver that returns an object of this type.

- create: Inject the logged in user’s $ctx.identity.username as the ownerField automatically.

- update: Add a conditional update that checks the stored ownerField is the same as $ctx.identity.username.

- delete: Add a conditional delete that checks the stored ownerField is the same as $ctx.identity.username.

Note: When specifying operations as a part of the @auth rule, the operations not included in the list are not protected by default. For example, let’s say you have the following schema:

type Todo @model

@auth(rules: [{ allow: owner, operations: [create, read] }]) {

id: ID!

updatedAt: AWSDateTime!

content: String!

}

In this schema, only the owner of the object is authorized to perform read operations (getTodo and listTodos) on the object they created. However, this does not prevent any other user (anyone other than the creator or owner of the object) from updating or deleting another owner’s object.

Here’s a truth table for the above-mentioned schema. In the table below other refers to any user other than the creator or owner of the object.

| | getTodo | listTodos | createTodo | updateTodo | deleteTodo |
|---|---|---|---|---|---|
| owner | ✅ | ✅ | ✅ | ✅ | ✅ |
| other | ❌ | ❌ | ✅ | ✅ | ✅ |

If you want to prevent update and delete operations by other users, you need to modify the @auth rule to explicitly include the update and delete operations, so that your schema looks like the following:

type Todo @model

@auth(rules: [{ allow: owner, operations: [create, read, update, delete] }]) {

id: ID!

updatedAt: AWSDateTime!

content: String!

}

Here’s a truth table for the above-mentioned schema. In the table below other refers to any user other than the creator or owner of the object.

| | getTodo | listTodos | createTodo | updateTodo | deleteTodo |
|---|---|---|---|---|---|
| owner | ✅ | ✅ | ✅ | ✅ | ✅ |
| other | ❌ | ❌ | ✅ | ❌ | ❌ |
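The two truth tables in this section follow from one rule: only the operations listed in the owner rule are protected. The Python sketch below reproduces them. It is a simplified model, not the generated resolver logic; the names is_allowed and truth_table are hypothetical, and it assumes the caller does not supply the owner field on create.

```python
ALL_OPERATIONS = ("create", "read", "update", "delete")


def is_allowed(operation, protected_operations, is_owner):
    """Model of a single owner rule: only listed operations are protected."""
    if operation not in protected_operations:
        return True   # unprotected operations are open to any API user
    if operation == "create":
        return True   # create records the caller as owner instead of rejecting
    return is_owner   # read, update, and delete require ownership


def truth_table(protected_operations):
    """Return {"owner": {...}, "other": {...}} mapping operation to allowed."""
    return {
        who: {
            op: is_allowed(op, protected_operations, who == "owner")
            for op in ALL_OPERATIONS
        }
        for who in ("owner", "other")
    }
```

With operations [create, read], the "other" row allows update and delete; listing all four operations closes them, matching the second table.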

Note: Specifying @auth(rules: [{ allow: owner, operations: [create]}]) still allows anyone who has access to your API to create records (as shown in the above truth table). However, including this is necessary when specifying other owner auth rules to ensure that the owner is stored with the record so it can be verified on subsequent requests.

You may also apply multiple ownership rules on a single @model type. For example, imagine you have a type Draft that stores unfinished posts for a blog. You might want to allow the Draft’s owner to create, update, delete, and read Draft objects. However, you might also want the Draft’s editors to be able to update and read Draft objects. To allow for this use case you could use the following type definition:

type Draft @model

@auth(rules: [

# Defaults to use the "owner" field.

{ allow: owner },

# Authorize the update mutation and the read queries for editors.

{ allow: owner, ownerField: "editors", operations: [update, read] }

]) {

id: ID!

title: String!

content: String

owner: String

editors: [String]

}

Ownership with create mutations

The ownership authorization rule tries to make itself as easy as possible to use. One feature that helps with this is that it will automatically fill ownership fields unless told explicitly not to do so. To show how this works, let’s look at how the create mutation would work for the Draft type above:

mutation CreateDraft {

createDraft(input: { title: "A new draft" }) {

id

title

owner

editors

}

}

Let’s assume that when I call this mutation I am logged in as someuser@my-domain.com. The result would be:

{

"data": {

"createDraft": {

"id": "...",

"title": "A new draft",

"owner": "someuser@my-domain.com",

"editors": ["someuser@my-domain.com"]

}

}

}

The Mutation.createDraft resolver is smart enough to match your auth rules to attributes and will fill them in by default. If you do not want the value to be automatically set, all you need to do is include a value for it in your input. For example, to have the resolver automatically set the owner but not the editors, you would run this:

mutation CreateDraft {

createDraft(

input: {

title: "A new draft",

editors: []

}

) {

id

title

owner

editors

}

}

This would return:

{

"data": {

"createDraft": {

"id": "...",

"title": "A new draft",

"owner": "someuser@my-domain.com",

"editors": []

}

}

}

You can try to do the same with owner, but this will throw an Unauthorized exception because you are no longer the owner of the object you are trying to create:

mutation CreateDraft {

createDraft(

input: {

title: "A new draft",

editors: [],

owner: null

}

) {

id

title

owner

editors

}

}

To set the owner to null with the current schema, you would still need to be in the editors list:

mutation CreateDraft {

createDraft(

input: {

title: "A new draft",

editors: ["someuser@my-domain.com"],

owner: null

}

) {

id

title

owner

editors

}

}

This would return:

{

"data": {

"createDraft": {

"id": "...",

"title": "A new draft",

"owner": null,

"editors": ["someuser@my-domain.com"]

}

}

}
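The create behavior walked through in this section can be summarized with a small Python model: ownership fields that are omitted from the input are filled with the caller's identity, and the request is rejected if the caller ends up in none of them. This is a sketch consistent with the four examples above, not the actual resolver template; create_draft and the Unauthorized class are hypothetical names.

```python
class Unauthorized(Exception):
    """Raised when the caller is not named in any ownership field."""


def create_draft(input_fields, username):
    """Fill omitted ownership fields, then require the caller to appear in one."""
    record = dict(input_fields)
    if "owner" not in record:
        record["owner"] = username      # single-valued ownership field
    if "editors" not in record:
        record["editors"] = [username]  # list-valued ownership field

    is_owner = record["owner"] == username
    is_editor = username in (record["editors"] or [])
    if not (is_owner or is_editor):
        raise Unauthorized("caller is not named in any ownership field")
    return record
```

Passing an explicit value, even an empty list or null, counts as "provided", which is why editors: [] suppresses the automatic fill while omitting the field does not.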

Static Group Authorization

Static group authorization allows you to protect @model types by restricting access to a known set of groups. For example, you can allow all Admin users to create, update, delete, get, and list Salary objects.

type Salary @model @auth(rules: [{allow: groups, groups: ["Admin"]}]) {

id: ID!

wage: Int

currency: String

}

When calling the GraphQL API, if the user credential (as specified by the resolver’s $ctx.identity) is not enrolled in the Admin group, the operation will fail.
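The static group check can be pictured as a membership test against the groups claim in the caller's token. The sketch below assumes the token claims are already decoded into a dictionary and that groups arrive under the default cognito:groups claim; the function name in_static_group is hypothetical.

```python
def in_static_group(claims, allowed_groups, group_claim="cognito:groups"):
    """True if the caller's token lists at least one of the allowed groups."""
    user_groups = claims.get(group_claim) or []
    return any(group in allowed_groups for group in user_groups)
```

For the Salary example, a caller whose claims contain "Admin" under cognito:groups passes the check; a caller with no groups claim does not.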

To enable advanced authorization use cases, you can layer auth rules to provide specialized functionality. To show how we might do that, let’s expand the Draft example we started in the Owner Authorization section above. When we last left off, a Draft object could be updated and read by both its owner and any of its editors and could be created and deleted only by its owner. Let’s change it so that now any member of the “Admin” group can also create, update, delete, and read a Draft object.

type Draft @model

@auth(rules: [

# Defaults to use the "owner" field.

{ allow: owner },

# Authorize the update mutation and the read queries for editors.

{ allow: owner, ownerField: "editors", operations: [update, read] },

# Admin users can access any operation.

{ allow: groups, groups: ["Admin"] }

]) {

id: ID!

title: String!

content: String

owner: String

editors: [String]!

}

Dynamic Group Authorization

# Dynamic group authorization with multiple groups

type Post @model @auth(rules: [{allow: groups, groupsField: "groups"}]) {

id: ID!

title: String

groups: [String]

}

# Dynamic group authorization with a single group

type Post @model @auth(rules: [{allow: groups, groupsField: "group"}]) {

id: ID!

title: String

group: String

}

With dynamic group authorization, each record contains an attribute specifying what groups should be able to access it. Use the groupsField argument to specify which attribute in the underlying data store holds this group information. To specify that a single group should have access, use a field of type String. To specify that multiple groups should have access, use a field of type [String].
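In contrast to static groups, the dynamic check reads the permitted groups from the record itself. The following Python sketch handles both shapes described above, a single String and a [String] list. It is an illustration only; in_dynamic_group is a hypothetical name and the record is assumed to be a plain dictionary.

```python
def in_dynamic_group(record, user_groups, groups_field="groups"):
    """True if the caller belongs to a group stored on the record itself."""
    stored = record.get(groups_field)
    if stored is None:
        return False                  # record names no groups
    if isinstance(stored, str):       # field of type String: a single group
        stored = [stored]
    return any(group in user_groups for group in stored)
```

The groups_field parameter plays the role of the groupsField argument, so the same function covers both Post variants shown above.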

Just as with the other auth rules, you can layer dynamic group rules on top of other rules. Let’s again expand the Draft example from the Owner Authorization and Static Group Authorization sections above. When we last left off, editors could update and read objects, owners had full access, and members of the Admin group had full access to Draft objects. Now we have a new requirement where each record should be able to specify an optional list of groups that can read the draft. This would allow you to share an individual document with an external team, for example.

type Draft @model

@auth(rules: [

# Defaults to use the "owner" field.

{ allow: owner },

# Authorize the update mutation and the read queries for editors.

{ allow: owner, ownerField: "editors", operations: [update, read] },

# Admin users can access any operation.

{ allow: groups, groups: ["Admin"] },

# Each record may specify which groups may read them.

{ allow: groups, groupsField: "groupsCanAccess", operations: [read] }

]) {

id: ID!

title: String!

content: String

owner: String

editors: [String]!

groupsCanAccess: [String]!

}

With this setup, you could create an object that can be read by the “BizDev” group:

mutation CreateDraft {

createDraft(input: {

title: "A new draft",

editors: [],

groupsCanAccess: ["BizDev"]

}) {

id

groupsCanAccess

}

}

And another draft that can be read by the “Marketing” group:

mutation CreateDraft {

createDraft(input: {

title: "Another draft",

editors: [],

groupsCanAccess: ["Marketing"]

}) {

id

groupsCanAccess

}

}

public Authorization

# The simplest case

type Post @model @auth(rules: [{allow: public}]) {

id: ID!

title: String!

}

The public authorization specifies that everyone will be allowed to access the API. Behind the scenes, the API will be protected with an API Key. To be able to use public, the API must have an API Key configured.

# public authorization with provider override

type Post @model @auth(rules: [{allow: public, provider: iam}]) {

id: ID!

title: String!

}

The @auth directive allows the override of the default provider for a given authorization mode. In the sample above iam is specified as the provider which allows you to use an “UnAuthenticated Role” from Cognito Identity Pools for public access, instead of an API Key. When used in conjunction with amplify add auth the CLI generates scoped down IAM policies for the “UnAuthenticated” role automatically.

private Authorization

# The simplest case

type Post @model @auth(rules: [{allow: private}]) {

id: ID!

title: String!

}

The private authorization specifies that everyone with a valid JWT token from the configured Cognito User Pool will be allowed to access the API. To be able to use private, the API must have a Cognito User Pool configured.

# private authorization with provider override

type Post @model @auth(rules: [{allow: private, provider: iam}]) {

id: ID!

title: String!

}

The @auth directive allows the override of the default provider for a given authorization mode. In the sample above iam is specified as the provider which allows you to use an “Authenticated Role” from Cognito Identity Pools for private access. When used in conjunction with amplify add auth the CLI generates scoped down IAM policies for the “Authenticated” role automatically.

Authorization Using an oidc Provider

# private authorization with provider override

type Post @model @auth(rules: [{allow: private, provider: oidc}]) {

id: ID!

title: String!

}

# owner authorization with provider override

type Profile @model @auth(rules: [{allow: owner, provider: oidc, identityClaim: "sub"}]) {

id: ID!

displayName: String!

}

By using a configured oidc provider for the API, it is possible to authenticate the users against it to perform operations on the Post type, and owner authorization is also possible.

Combining Authorization Rules

The objects and fields in the GraphQL schema can have rules with different authorization providers assigned.

type Post @model

@auth (

rules: [

{ allow: owner },

{ allow: private, provider: iam, operations: [read] }

]

) {

id: ID!

title: String

owner: String

}

In the example above, the model is protected by Cognito User Pools by default and the owner can perform any operation on the Post type, but a Lambda function using the configured IAM policies can only call the getPost and listPosts queries.

type Post @model @auth (rules: [{ allow: private }]) {

id: ID!

title: String

owner: String

secret: String

@auth (rules: [{ allow: private, provider: iam, operations: [create, update] }])

}

In the example above the model is protected by Cognito User Pools by default and anyone with a valid JWT token can perform any operation on the Post type, but cannot update the secret field. The secret field can only be modified through the configured IAM policies, from a Lambda function for example.

Allowed Authorization Mode vs. Provider Combinations

The following table shows the allowed combinations of authorization modes and providers.

| | owner | groups | public | private |
|---|---|---|---|---|
| userPools | ✅ | ✅ | | ✅ |
| oidc | ✅ | | | |
| apiKey | | | ✅ | |
| iam | | | ✅ | ✅ |

Note that groups leverages Cognito User Pools, but no provider assignment is needed or possible.

Custom Claims

@auth supports using custom claims if you do not wish to use the default username or cognito:groups claims from your JWT token which are populated by Amazon Cognito. This can be helpful if you are using tokens from a 3rd party OIDC system or if you wish to populate a claim with a list of groups from an external system, such as when using a Pre Token Generation Lambda Trigger which reads from a database. To use custom claims specify identityClaim or groupClaim as appropriate like in the example below:

type Post @model

@auth(rules: [

{allow: owner, identityClaim: "user_id"},

{allow: groups, groups: ["Moderator"], groupClaim: "user_groups"}

])

{

id: ID!

owner: String

postname: String

content: String

}

In this example the object owner will check against a user_id claim. Similarly if the user_groups claim contains a “Moderator” string then access will be granted.
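The claim lookups described above can be sketched in plain JavaScript. This is only an illustration of the logic, not the generated resolver code; the function names and the sample claim values are hypothetical.

```javascript
// Decoded JWT claims as a third-party OIDC provider might issue them (made-up values).
const claims = { user_id: "u-123", user_groups: ["Moderator", "Editor"] };

// Owner check: compare the record's owner field with the configured identity claim.
function isOwner(record, tokenClaims, identityClaim = "username") {
  return record.owner !== undefined && record.owner === tokenClaims[identityClaim];
}

// Group check: look for an allowed group in the configured group claim.
function inAllowedGroup(tokenClaims, allowedGroups, groupClaim = "cognito:groups") {
  const groups = tokenClaims[groupClaim] || [];
  return allowedGroups.some((group) => groups.includes(group));
}

const post = { id: "1", owner: "u-123", postname: "Hello" };

console.log(isOwner(post, claims, "user_id")); // true
console.log(inAllowedGroup(claims, ["Moderator"], "user_groups")); // true
console.log(inAllowedGroup(claims, ["Moderator"])); // false, the default claim is absent
```

With identityClaim and groupClaim set as in the schema above, the same token passes both checks, while the default claim names would not match it.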

Note: identityField is deprecated in favor of identityClaim.

Authorizing Subscriptions

Prior to version 2.0 of the CLI, @auth rules did not apply to subscriptions. Instead you were required to either turn them off or use Custom Resolvers to manually add authorization checks. In the latest versions @auth protections have been added to subscriptions; however, this can introduce different behavior into existing applications. First, owner is now a required argument for owner-based authorization, as shown below. Second, the selection set will set null on fields when mutations are invoked if per-field @auth is set on that field (see Per-Field with Subscriptions). If you wish to keep the previous behavior, set level: public on your model as defined below.

When @auth is used, subscriptions behave slightly differently from queries and mutations because of their event-based nature. When protecting a model using the owner auth strategy, each subscription request requires that the user is passed as an argument to the subscription request. If the user field is not passed, the subscription connection will fail. If it is passed, the user will only be notified of updates to records for which they are the owner.

Alternatively, when the model is protected using the static group auth strategy, the subscription request will only succeed if the user is in an allowed group. Further, the user will only get notifications of updates to records if they are in an allowed group. Note: You don’t need to pass the user as an argument in the subscription request, since the resolver will instead check the contents of your JWT token.

Dynamic groups have no impact on subscriptions. You will not get notified of any updates to them.

For example suppose you have the following schema:

type Post @model

@auth(rules: [{allow: owner}])

{

id: ID!

owner: String

postname: String

content: String

}

This means that the subscription must look like the following or it will fail:

subscription OnCreatePost {

onCreatePost(owner: "Bob") {

postname

content

}

}

Note that if your type doesn’t already have an owner field the Transformer will automatically add this for you. Passing in the current user can be done dynamically in your code by using Auth.currentAuthenticatedUser() in JavaScript, AWSMobileClient.default().username in iOS, or AWSMobileClient.getInstance().getUsername() in Android.
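As a rough sketch, passing the current user dynamically could start from a helper like the one below. It only builds the GraphQL document and variables; the helper name buildOwnerSubscription is hypothetical, and how you obtain the username and send the request depends on your client library.

```javascript
// Hypothetical helper: builds the request for an owner-protected subscription.
// The subscription name and fields follow the Post schema above.
function buildOwnerSubscription(username) {
  if (!username) {
    // Without an owner argument the subscription connection would fail.
    throw new Error("A username is required for owner-based subscriptions.");
  }
  return {
    query: `subscription OnCreatePost($owner: String!) {
  onCreatePost(owner: $owner) {
    postname
    content
  }
}`,
    variables: { owner: username },
  };
}

const request = buildOwnerSubscription("Bob");
console.log(request.variables); // { owner: 'Bob' }
```

Using a GraphQL variable rather than string concatenation keeps the document constant and avoids quoting problems in usernames.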

In the case of groups if you define the following:

type Post @model

@auth(rules: [{allow: groups, groups: ["Admin"]}])

{

id: ID!

owner: String

postname: String

content: String

}

Then you don’t need to pass an argument, as the resolver will check the contents of your JWT token at subscription time and ensure you are in the “Admin” group.

Finally, if you use both owner and group authorization then the owner argument becomes optional. This means the following:

- If you don’t pass the user in, but are a member of an allowed group, the subscription will notify you of records added.

- If you don’t pass the user in, but are NOT a member of an allowed group, the subscription will fail to connect.

- If you pass the user in who IS the owner but is NOT a member of a group, the subscription will notify you of records added of which you are the owner.

- If you pass the user in who is NOT the owner and is NOT a member of a group, the subscription will not notify you of anything, as there are no records that you own.

You may disable authorization checks on subscriptions or completely turn off subscriptions as well by specifying either public or off in @model:

@model (subscriptions: { level: public })

Field Level Authorization

The @auth directive specifies that access to a specific field should be restricted

according to its own set of rules. Here are a few situations where this is useful:

Protect access to a field that has different permissions than the parent model

You might want to have a user model where some fields, like username, are a part of the public profile and the ssn field is visible to owners.

type User @model {

id: ID!

username: String

ssn: String @auth(rules: [{ allow: owner, ownerField: "username" }])

}

Protect access to a @connection resolver based on some attribute in the source object

This schema will protect access to Post objects connected to a user based on an attribute

in the User model. You may turn off top level queries by specifying queries: null in the @model

declaration which restricts access such that queries must go through the @connection resolvers

to reach the model.

type User @model {

id: ID!

username: String

posts: [Post]

@connection(name: "UserPosts")

@auth(rules: [{ allow: owner, ownerField: "username" }])

}

type Post @model(queries: null) { ... }

Protect mutations such that certain fields can have different access rules than the parent model

When used on field definitions, @auth directives protect all operations by default.

To protect read operations, a resolver is added to the protected field that implements authorization logic.

To protect mutation operations, logic is added to existing mutations that will be run if the mutation’s input

contains the protected field. For example, here is a model where owners and admins can read employee

salaries but only admins may create or update them.

type Employee @model {

id: ID!

email: String

# Owners & members of the "Admin" group may read employee salaries.

# Only members of the "Admin" group may create an employee with a salary

# or update a salary.

salary: String

@auth(rules: [

{ allow: owner, ownerField: "username", operations: [read] },

{ allow: groups, groups: ["Admin"], operations: [create, update, read] }

])

}
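The write-side rule for salary can be sketched in plain JavaScript as follows. This is an illustration of the logic only, assuming the caller's groups arrive in the cognito:groups claim; the function name canWriteSalary is hypothetical and the generated resolvers are mapping templates, not JavaScript.

```javascript
// Field-level write check for Employee.salary (illustrative only).
function canWriteSalary(input, claims) {
  // The check runs only when the mutation input contains the protected field.
  if (!Object.prototype.hasOwnProperty.call(input, "salary")) {
    return true;
  }
  const groups = claims["cognito:groups"] || [];
  return groups.includes("Admin");
}

const admin = { "cognito:groups": ["Admin"] };
const employee = { "cognito:groups": [] };

console.log(canWriteSalary({ id: "1", salary: "100" }, admin)); // true
console.log(canWriteSalary({ id: "1", salary: "100" }, employee)); // false
console.log(canWriteSalary({ id: "1", email: "a@example.com" }, employee)); // true
```

The last call shows why a mutation that leaves the protected field out of its input is not affected by the field-level rule.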

Note: The delete operation, when used in @auth directives on field definitions, translates

to protecting the update mutation such that the field cannot be set to null unless authorized.

Note: When specifying operations as a part of the @auth rule on a field, the operations not included in the operations list are not protected by default. For example, let’s say you have the following schema:

type Todo

@model

{

id: ID!

updatedAt: AWSDateTime!

content: String! @auth(rules: [{ allow: owner, operations: [update] }])

}

In this schema, only the owner of the object is authorized to perform update operations on the content field. However, this does not prevent another user (any user other than the creator or owner of the object) from updating some other field in the object. If you want to prevent update operations on other fields, you need to explicitly add auth rules that restrict access to them. One way is to specify @auth rules on each field that you want to protect, like the following:

type Todo

@model

{

id: ID!

updatedAt: AWSDateTime! @auth(rules: [{ allow: owner, operations: [update] }]) # or @auth(rules: [{ allow: groups, groups: ["Admins"] }])

content: String! @auth(rules: [{ allow: owner, operations: [update] }])

}

You can also provide explicit deny rules to your field like the following:

type Todo

@model

{

id: ID!

updatedAt: AWSDateTime! @auth(rules: [{ allow: groups, groups: ["ForbiddenGroup"] }])

content: String! @auth(rules: [{ allow: owner, operations: [update] }])

}

You can also combine top-level @auth rules on the type with field level auth rules. For example, let’s consider the following schema:

type Todo

@model @auth(rules: [{allow: groups, groups: ["Admin"], operations:[update] }])

{

id: ID!

updatedAt: AWSDateTime!

content: String! @auth(rules: [{ allow: owner, operations: [update] }])

}

In the above schema, users in the Admin group are authorized to create, read, delete, and update objects of type Todo (except the content field of an object owned by another user).

An owner of an object is authorized to create Todo objects and read all objects of type Todo. In addition, an owner can perform an update operation on the Todo object only when the content field is present as a part of the input.

Any other user who isn’t an owner of an object isn’t authorized to update that object.

Per-Field with Subscriptions

When setting per-field @auth the Transformer will alter the response of mutations for those fields by setting them to null in order to prevent sensitive data from being sent over subscriptions. For example in the schema below:

type Employee @model

@auth(rules: [

{ allow: owner },

{ allow: groups, groups: ["Admins"] }

]) {

id: ID!

name: String!

address: String!

ssn: String @auth(rules: [{allow: owner}])

}

Subscribers might be a member of the “Admins” group and should get notified of the new item, however they should not get the ssn field. If you run the following mutation:

mutation {

createEmployee(input: {

name: "Nadia"

address: "123 First Ave"

ssn: "392-95-2716"

}){

name

address

ssn

}

}

The mutation will run successfully, however ssn will return null in the GraphQL response. This prevents anyone in the “Admins” group who is subscribed to updates from receiving the private information. Subscribers would still receive the name and address. The data is still written and this can be verified by running a query.
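The effect on the mutation response can be sketched as below. This is a plain JavaScript illustration, not the Transformer's generated template; the function name and the PROTECTED_FIELDS list are hypothetical and simply mirror the Employee schema above.

```javascript
// Fields that carry their own @auth rule in the Employee schema above.
const PROTECTED_FIELDS = ["ssn"];

// Returns a copy of the mutation result with protected fields set to null,
// which is what subscribers (and the mutation caller) would see.
function redactMutationResponse(result, protectedFields = PROTECTED_FIELDS) {
  const redacted = { ...result };
  for (const field of protectedFields) {
    if (field in redacted) {
      redacted[field] = null;
    }
  }
  return redacted;
}

const stored = { name: "Nadia", address: "123 First Ave", ssn: "392-95-2716" };
console.log(redactMutationResponse(stored));
// { name: 'Nadia', address: '123 First Ave', ssn: null }
```

The stored record is left untouched; only the copy returned in the response has the field cleared.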

Generates

The @auth directive will add authorization snippets to any relevant resolver

mapping templates at compile time. Different operations use different methods

of authorization.

Owner Authorization

type Post @model @auth(rules: [{allow: owner}]) {

id: ID!

title: String!

}

The generated resolvers would be protected like so:

- Mutation.createX: Verify the requesting user has a valid credential and automatically set the owner attribute to equal $ctx.identity.username.

- Mutation.updateX: Update the condition expression so that the DynamoDB UpdateItem operation only succeeds if the record’s owner attribute equals the caller’s $ctx.identity.username.

- Mutation.deleteX: Update the condition expression so that the DynamoDB DeleteItem operation only succeeds if the record’s owner attribute equals the caller’s $ctx.identity.username.

- Query.getX: In the response mapping template verify that the result’s owner attribute is the same as the $ctx.identity.username. If it is not, return null.

- Query.listX: In the response mapping template filter the result’s items such that only items with an owner attribute that is the same as the $ctx.identity.username are returned.

- @connection resolvers: In the response mapping template filter the result’s items such that only items with an owner attribute that is the same as the $ctx.identity.username are returned. This is not enabled when using the queries argument.
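The create and read checks above can be sketched in plain JavaScript. This only illustrates the logic; the generated resolvers are AppSync mapping templates, and the function names here are hypothetical.

```javascript
// Query.getX: return the record only if the caller owns it, otherwise null.
function authorizeGet(record, identity) {
  return record && record.owner === identity.username ? record : null;
}

// Query.listX: keep only the items owned by the caller.
function authorizeList(items, identity) {
  return items.filter((item) => item.owner === identity.username);
}

// Mutation.createX: stamp the caller as the owner of the new record.
function prepareCreate(input, identity) {
  return { ...input, owner: identity.username };
}

const identity = { username: "bob" };
const posts = [
  { id: "1", title: "Mine", owner: "bob" },
  { id: "2", title: "Theirs", owner: "alice" },
];

console.log(authorizeGet(posts[1], identity)); // null
console.log(authorizeList(posts, identity).map((p) => p.id)); // [ '1' ]
console.log(prepareCreate({ id: "3", title: "New" }, identity).owner); // bob
```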

Static Group Authorization

type Post @model @auth(rules: [{allow: groups, groups: ["Admin"]}]) {

id: ID!

title: String!

groups: String

}

Static group auth is simpler than the others. The generated resolvers would be protected like so:

- Mutation.createX: Verify the requesting user has a valid credential and that $ctx.identity.claims.get("cognito:groups") contains the Admin group. If it does not, fail.

- Mutation.updateX: Verify the requesting user has a valid credential and that $ctx.identity.claims.get("cognito:groups") contains the Admin group. If it does not, fail.

- Mutation.deleteX: Verify the requesting user has a valid credential and that $ctx.identity.claims.get("cognito:groups") contains the Admin group. If it does not, fail.

- Query.getX: Verify the requesting user has a valid credential and that $ctx.identity.claims.get("cognito:groups") contains the Admin group. If it does not, fail.

- Query.listX: Verify the requesting user has a valid credential and that $ctx.identity.claims.get("cognito:groups") contains the Admin group. If it does not, fail.

- @connection resolvers: Verify the requesting user has a valid credential and that $ctx.identity.claims.get("cognito:groups") contains the Admin group. If it does not, fail. This is not enabled when using the queries argument.

Dynamic Group Authorization

type Post @model @auth(rules: [{allow: groups, groupsField: "groups"}]) {

id: ID!

title: String!

groups: String

}

The generated resolvers would be protected like so:

- Mutation.createX: Verify the requesting user has a valid credential and that it contains a claim to at least one group passed to the query in the $ctx.args.input.groups argument.

- Mutation.updateX: Update the condition expression so that the DynamoDB UpdateItem operation only succeeds if the record’s groups attribute contains at least one of the caller’s claimed groups via $ctx.identity.claims.get("cognito:groups").

- Mutation.deleteX: Update the condition expression so that the DynamoDB DeleteItem operation only succeeds if the record’s groups attribute contains at least one of the caller’s claimed groups via $ctx.identity.claims.get("cognito:groups").

- Query.getX: In the response mapping template verify that the result’s groups attribute contains at least one of the caller’s claimed groups via $ctx.identity.claims.get("cognito:groups").

- Query.listX: In the response mapping template filter the result’s items such that only items with a groups attribute that contains at least one of the caller’s claimed groups via $ctx.identity.claims.get("cognito:groups") are returned.

- @connection resolver: In the response mapping template filter the result’s items such that only items with a groups attribute that contains at least one of the caller’s claimed groups via $ctx.identity.claims.get("cognito:groups") are returned. This is not enabled when using the queries argument.
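The group overlap test that these resolvers rely on can be sketched in plain JavaScript. It is an illustration only, with hypothetical function names; it assumes the record's groups attribute holds either one group name or a list of names.

```javascript
// True when the record's groups overlap with the caller's claimed groups.
function hasGroupAccess(recordGroups, claims) {
  const claimed = claims["cognito:groups"] || [];
  // Accept either a single group name or a list of names on the record.
  const allowed = Array.isArray(recordGroups) ? recordGroups : [recordGroups];
  return allowed.some((group) => claimed.includes(group));
}

// Query.listX: keep only the items the caller has group access to.
function authorizeList(items, claims) {
  return items.filter((item) => hasGroupAccess(item.groups, claims));
}

const claims = { "cognito:groups": ["Editors"] };
const posts = [
  { id: "1", title: "Draft", groups: "Editors" },
  { id: "2", title: "Payroll", groups: ["Finance", "Admin"] },
  { id: "3", title: "No groups" },
];

console.log(authorizeList(posts, claims).map((p) => p.id)); // [ '1' ]
```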

@function

The @function directive allows you to quickly & easily configure AWS Lambda resolvers within your AWS AppSync API.

Definition

directive @function(name: String!, region: String) on FIELD_DEFINITION

Usage

The @function directive allows you to quickly connect lambda resolvers to an AppSync API. You may deploy the AWS Lambda functions via the Amplify CLI, AWS Lambda console, or any other tool. To connect an AWS Lambda resolver, add the @function directive to a field in your schema.graphql.

Let’s assume we have deployed an echo function with the following contents:

exports.handler = function (event, context) {

context.done(null, event.arguments.msg);

};

If you deployed your function using the ‘amplify function’ category

The Amplify CLI provides support for maintaining multiple environments out of the box. When you deploy a function via amplify add function, it will automatically add the environment suffix to your Lambda function name. For example if you create a function named echofunction using amplify add function in the dev environment, the deployed function will be named echofunction-dev. The @function directive allows you to use ${env} to reference the current Amplify CLI environment.

type Query {

echo(msg: String): String @function(name: "echofunction-${env}")

}

If you deployed your function without amplify

If you deployed your function without amplify then you must provide the full Lambda function name. If we deployed the same function with the name echofunction then you would have:

type Query {

echo(msg: String): String @function(name: "echofunction")

}

Example: Return custom data and run custom logic

You can use the @function directive to write custom business logic in an AWS Lambda function. To get started, use

amplify add function, the AWS Lambda console, or other tool to deploy an AWS Lambda function with the following contents.

For example purposes assume the function is named GraphQLResolverFunction:

const POSTS = [

{ id: 1, title: "AWS Lambda: How To Guide." },

{ id: 2, title: "AWS Amplify Launches @function and @key directives." },

{ id: 3, title: "Serverless 101" }

];

const COMMENTS = [

{ postId: 1, content: "Great guide!" },

{ postId: 1, content: "Thanks for sharing!" },

{ postId: 2, content: "Can't wait to try them out!" }

];

// Get all posts. Write your own logic that reads from any data source.

function getPosts() {

return POSTS;

}

// Get the comments for a single post.

function getCommentsForPost(postId) {

return COMMENTS.filter(comment => comment.postId === postId);

}

/**

* Using this as the entry point, you can use a single function to handle many resolvers.

*/

const resolvers = {

Query: {

posts: ctx => {

return getPosts();

},

},

Post: {

comments: ctx => {

return getCommentsForPost(ctx.source.id);

},

},

}

// event

// {

// "typeName": "Query", /* Filled dynamically based on @function usage location */

// "fieldName": "me", /* Filled dynamically based on @function usage location */

// "arguments": { /* GraphQL field arguments via $ctx.arguments */ },

// "identity": { /* AppSync identity object via $ctx.identity */ },

// "source": { /* The object returned by the parent resolver. E.G. if resolving field 'Post.comments', the source is the Post object. */ },

// "request": { /* AppSync request object. Contains things like headers. */ },

// "prev": { /* If using the built-in pipeline resolver support, this contains the object returned by the previous function. */ },

// }

exports.handler = async (event) => {

const typeHandler = resolvers[event.typeName];

if (typeHandler) {

const resolver = typeHandler[event.fieldName];

if (resolver) {

return await resolver(event);

}

}

throw new Error("Resolver not found.");

};

Example: Get the logged in user from Amazon Cognito User Pools

When building applications, it is often useful to fetch information for the current user. We can use the @function directive to quickly add a resolver that uses AppSync identity information to fetch a user from Amazon Cognito User Pools. First make sure you have enabled Amazon Cognito User Pools via amplify add auth and added a GraphQL API via amplify add api to an amplify project. Once you have created the user pool, get the UserPoolId from amplify-meta.json in the backend/ directory of your amplify project. You will provide this value as an environment variable in a moment. Next, using the Amplify function category, AWS console, or other tool, deploy an AWS Lambda function with the following contents.

For example purposes assume the function is named GraphQLResolverFunction:

/* Amplify Params - DO NOT EDIT

You can access the following resource attributes as environment variables from your Lambda function

var environment = process.env.ENV

var region = process.env.REGION

var authMyResourceNameUserPoolId = process.env.AUTH_MYRESOURCENAME_USERPOOLID

Amplify Params - DO NOT EDIT */

const { CognitoIdentityServiceProvider } = require('aws-sdk');

const cognitoIdentityServiceProvider = new CognitoIdentityServiceProvider();

/**

* Get user pool information from environment variables.

*/

const COGNITO_USERPOOL_ID = process.env.AUTH_MYRESOURCENAME_USERPOOLID;

if (!COGNITO_USERPOOL_ID) {

throw new Error(`Function requires environment variable: 'AUTH_MYRESOURCENAME_USERPOOLID'`);

}

const COGNITO_USERNAME_CLAIM_KEY = 'cognito:username';

/**

* Using this as the entry point, you can use a single function to handle many resolvers.

*/

const resolvers = {

Query: {

echo: ctx => {

return ctx.arguments.msg;

},

me: async ctx => {

var params = {

UserPoolId: COGNITO_USERPOOL_ID, /* required */

Username: ctx.identity.claims[COGNITO_USERNAME_CLAIM_KEY], /* required */

};

try {

// Read more: https://docs.aws.amazon.com/AWSJavaScriptSDK/latest/AWS/CognitoIdentityServiceProvider.html#adminGetUser-property

return await cognitoIdentityServiceProvider.adminGetUser(params).promise();

} catch (e) {

throw new Error(`NOT FOUND`);

}

}

},

}

// event

// {

// "typeName": "Query", /* Filled dynamically based on @function usage location */

// "fieldName": "me", /* Filled dynamically based on @function usage location */

// "arguments": { /* GraphQL field arguments via $ctx.arguments */ },

// "identity": { /* AppSync identity object via $ctx.identity */ },

// "source": { /* The object returned by the parent resolver. E.G. if resolving field 'Post.comments', the source is the Post object. */ },

// "request": { /* AppSync request object. Contains things like headers. */ },

// "prev": { /* If using the built-in pipeline resolver support, this contains the object returned by the previous function. */ },

// }

exports.handler = async (event) => {

const typeHandler = resolvers[event.typeName];

if (typeHandler) {

const resolver = typeHandler[event.fieldName];

if (resolver) {

return await resolver(event);

}

}

throw new Error("Resolver not found.");

};

You can connect the posts and comments function from the first example to your AppSync API deployed via Amplify using this schema:

type Query {

posts: [Post] @function(name: "GraphQLResolverFunction")

}

type Post {

id: ID!

title: String!

comments: [Comment] @function(name: "GraphQLResolverFunction")

}

type Comment {

postId: ID!

content: String

}

This simple lambda function shows how you can write your own custom logic using a language of your choosing. Try enhancing the example with your own data and logic.

When deploying the function, make sure your function has access to the auth resource. You can run the amplify update function command for the CLI to automatically supply an environment variable named AUTH_<RESOURCE_NAME>_USERPOOLID to the function and associate corresponding CRUD policies to the execution role of the function.

After deploying our function, we can connect it to AppSync by defining some types and using the @function directive. Add this to your schema, to connect the

Query.echo and Query.me resolvers to our new function.

type Query {

me: User @function(name: "GraphQLResolverFunction")

echo(msg: String): String @function(name: "GraphQLResolverFunction")

}

# These types derived from https://docs.aws.amazon.com/AWSJavaScriptSDK/latest/AWS/CognitoIdentityServiceProvider.html#adminGetUser-property

type User {

Username: String!

UserAttributes: [Value]

UserCreateDate: String

UserLastModifiedDate: String

Enabled: Boolean

UserStatus: UserStatus

MFAOptions: [MFAOption]

PreferredMfaSetting: String

UserMFASettingList: [String]

}

type Value {

Name: String!

Value: String

}

type MFAOption {

DeliveryMedium: String

AttributeName: String

}

enum UserStatus {

UNCONFIRMED

CONFIRMED

ARCHIVED

COMPROMISED

UNKNOWN

RESET_REQUIRED

FORCE_CHANGE_PASSWORD

}

Next run amplify push and wait as your project finishes deploying. To test that everything is working as expected run amplify api console to open the GraphiQL editor for your API. You are going to need to open the Amazon Cognito User Pools console to create a user if you do not yet have any. Once you have created a user go back to the AppSync console’s query page and click “Login with User Pools”. You can find the ClientId in amplify-meta.json under the key AppClientIDWeb. Paste that value into the modal and login using your username and password. You can now run this query:

query {

me {

Username

UserStatus

UserCreateDate

UserAttributes {

Name

Value

}

MFAOptions {

AttributeName

DeliveryMedium

}

Enabled

PreferredMfaSetting

UserMFASettingList

UserLastModifiedDate

}

}

which will return user information related to the current user directly from your user pool.

Structure of the AWS Lambda function event

When writing Lambda functions that are connected via the @function directive, you can expect the following structure for the AWS Lambda event object.

| Key | Description |

|---|---|

| typeName | The name of the parent object type of the field being resolved. |

| fieldName | The name of the field being resolved. |

| arguments | A map containing the arguments passed to the field being resolved. |

| identity | A map containing identity information for the request. Contains a nested key ‘claims’ that contains the JWT claims if they exist. |

| source | When resolving a nested field in a query, the source contains the parent value at runtime. For example when resolving Post.comments, the source will be the Post object. |

| request | The AppSync request object. Contains header information. |

| prev | When using pipeline resolvers, this contains the object returned by the previous function. You can return the previous value for auditing use cases. |
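To try a handler locally, you could invoke it with a hand-built event that has the keys from the table above. The sketch below assumes a minimal echo resolver; the sample values are made up for local testing and are not what AppSync would necessarily send.

```javascript
// A single handler that dispatches on typeName and fieldName, as in the examples above.
const resolvers = {
  Query: {
    echo: (event) => event.arguments.msg,
  },
};

const handler = async (event) => {
  const typeHandler = resolvers[event.typeName];
  const resolver = typeHandler && typeHandler[event.fieldName];
  if (!resolver) {
    throw new Error("Resolver not found.");
  }
  return resolver(event);
};
exports.handler = handler;

// Hypothetical sample event; every value here is a placeholder.
const sampleEvent = {
  typeName: "Query",
  fieldName: "echo",
  arguments: { msg: "hello" },
  identity: { claims: {} },
  source: null,
  request: { headers: {} },
  prev: null,
};

handler(sampleEvent).then((result) => console.log(result)); // hello
```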

Calling functions in different regions

By default, we expect the function to be in the same region as the amplify project. If you need to call a function in a different (or static) region, you can provide the region argument.

type Query {

echo(msg: String): String @function(name: "echofunction", region: "us-east-1")

}

Calling functions in different AWS accounts is not supported via the @function directive but is supported by AWS AppSync.

Chaining functions

The @function directive supports AWS AppSync pipeline resolvers. That means, you can chain together multiple functions such that they are invoked in series when your field’s resolver is invoked. To create a pipeline resolver that calls out to multiple AWS Lambda functions in series, use multiple @function directives on the field.

type Mutation {

doSomeWork(msg: String): String @function(name: "worker-function") @function(name: "audit-function")

}

In the example above when you run a mutation that calls the Mutation.doSomeWork field, the worker-function will be invoked first then the audit-function will be invoked with an event that contains the results of the worker-function under the event.prev.result key. The audit-function would need to return event.prev.result if you want the result of worker-function to be returned for the field. Under the hood, Amplify creates an AppSync::FunctionConfiguration for each unique instance of @function in a document and a pipeline resolver containing a pointer to a function for each @function on a given field.
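A minimal sketch of what the audit-function might look like is shown below. The logging is a placeholder for whatever auditing you need; only the use of event.prev.result comes from the description above.

```javascript
// Hypothetical audit-function: records the worker result and passes it through.
const handler = async (event) => {
  const workerResult = event.prev ? event.prev.result : null;
  console.log(
    `Audit: ${event.typeName}.${event.fieldName} returned`,
    JSON.stringify(workerResult)
  );
  // Returning the previous result makes it the value of the GraphQL field.
  return workerResult;
};
exports.handler = handler;
```

Because the last function in the pipeline determines the field's value, returning anything else here would replace the worker-function result.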

Generates

The @function directive generates these resources as necessary:

- An AWS IAM role that has permission to invoke the function as well as a trust policy with AWS AppSync.

- An AWS AppSync data source that registers the new role and existing function with your AppSync API.

- An AWS AppSync pipeline function that prepares the lambda event and invokes the new data source.

- An AWS AppSync resolver that attaches to the GraphQL field and invokes the new pipeline functions.

@connection

The @connection directive enables you to specify relationships between @model types. Currently, this supports one-to-one, one-to-many, and many-to-one relationships. You may implement many-to-many relationships using two one-to-many connections and a joining @model type. See the usage section for details.

We also provide a fully working schema with 17 patterns related to relational designs.

Definition

directive @connection(keyName: String, fields: [String!]) on FIELD_DEFINITION

Usage

Relationships between types are specified by annotating fields on an @model object type with the @connection directive.

The fields argument indicates which fields are used to query for connected objects. The keyName argument can optionally be used to specify the name of a secondary index (an index that was set up using @key) that should be queried from the other type in the relationship.

When specifying a keyName, the fields argument should be provided to indicate which field(s) will be used to get connected objects. If keyName is not provided, then @connection queries the target table’s primary index.

Has One

In the simplest case, you can define a one-to-one connection where a project has one team:

type Project @model {

id: ID!

name: String

team: Team @connection

}

type Team @model {

id: ID!

name: String!

}

You can also define the field you would like to use for the connection by populating the first argument to the fields array and matching it to a field on the type:

type Project @model {

id: ID!

name: String

teamID: ID!

team: Team @connection(fields: ["teamID"])

}

type Team @model {

id: ID!

name: String!

}

In this case, the Project type has a teamID field added as an identifier for the team that the project belongs to. @connection can then get the connected Team object by querying the Team table with this teamID.

After it’s transformed, you can create projects and query the connected team as follows:

mutation CreateProject {

createProject(input: { name: "New Project", teamID: "a-team-id"}) {

id

name

team {

id

name

}

}

}

Note: The Project.team resolver is configured to work with the defined connection. This is done with a query on the Team table where teamID is passed in as an argument to the mutation.

Has Many

You can make a simple one-to-many connection as follows for a post that has many comments:

type Post @model {

id: ID!

title: String!

comments: [Comment] @connection(keyName: "byPost", fields: ["id"])

}

type Comment @model

@key(name: "byPost", fields: ["postID", "content"]) {

id: ID!

postID: ID!

content: String!

}

Note how a one-to-many connection needs a @key that allows comments to be queried by the postID. The connection uses this key to get all comments whose postID is the id of the post it was called on. After it’s transformed, you can create a post and a comment on it as follows:

mutation CreatePost {

createPost(input: { id: "a-post-id", title: "Post Title" } ) {

id

title

}

}

mutation CreateCommentOnPost {

createComment(input: { id: "a-comment-id", content: "A comment", postID: "a-post-id"}) {

id

content

}

}

And you can query a Post with its comments as follows:

query getPost {

getPost(id: "a-post-id") {

id

title

comments {

items {

id

content

}

}

}

}

Belongs To

You can make a connection bi-directional by adding a many-to-one connection to types that already have a one-to-many connection. In this case we add a connection from Comment to Post since each comment belongs to a post:

type Post @model {

id: ID!

title: String!

comments: [Comment] @connection(keyName: "byPost", fields: ["id"])

}

type Comment @model

@key(name: "byPost", fields: ["postID", "content"]) {

id: ID!

postID: ID!

content: String!

post: Post @connection(fields: ["postID"])

}

After it’s transformed, you can create comments with a post as follows:

mutation CreatePost {

createPost(input: { id: "a-post-id", title: "Post Title" } ) {

id

title

}

}

mutation CreateCommentOnPost1 {

createComment(input: { id: "a-comment-id-1", content: "A comment #1", postID: "a-post-id"}) {

id

content

}

}

mutation CreateCommentOnPost2 {

createComment(input: { id: "a-comment-id-2", content: "A comment #2", postID: "a-post-id"}) {

id

content

}

}

And you can query a Comment with its Post, then all Comments of that Post by navigating the connection:

query GetCommentWithPostAndComments {

getComment( id: "a-comment-id-1" ) {

id

content

post {

id

title

comments {

items {

id

content

}

}

}

}

}

Many-To-Many Connections

You can implement many to many using two 1-M @connections, an @key, and a joining @model. For example:

type Post @model {

id: ID!

title: String!

editors: [PostEditor] @connection(keyName: "byPost", fields: ["id"])

}

# Create a join model and disable queries as you don't need them

# and can query through Post.editors and User.posts

type PostEditor

@model(queries: null)

@key(name: "byPost", fields: ["postID", "editorID"])

@key(name: "byEditor", fields: ["editorID", "postID"]) {

id: ID!

postID: ID!

editorID: ID!

post: Post! @connection(fields: ["postID"])

editor: User! @connection(fields: ["editorID"])

}

type User @model {

id: ID!

username: String!

posts: [PostEditor] @connection(keyName: "byEditor", fields: ["id"])

}

This case is a bidirectional many-to-many relationship, which is why two @key directives are needed on the PostEditor model.

You can first create a Post and a User, and then add a connection between them by creating a PostEditor object as follows:

mutation CreateData {

p1: createPost(input: { id: "P1", title: "Post 1" }) {

id

}

p2: createPost(input: { id: "P2", title: "Post 2" }) {

id

}

u1: createUser(input: { id: "U1", username: "user1" }) {

id

}

u2: createUser(input: { id: "U2", username: "user2" }) {

id

}

}

mutation CreateLinks {

p1u1: createPostEditor(input: { id: "P1U1", postID: "P1", editorID: "U1" }) {

id

}

p1u2: createPostEditor(input: { id: "P1U2", postID: "P1", editorID: "U2" }) {

id

}

p2u1: createPostEditor(input: { id: "P2U1", postID: "P2", editorID: "U1" }) {

id

}

}

Note that neither the User type nor the Post type has any identifiers of connected objects. The connection information is held entirely inside the PostEditor objects.
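
Since the link lives only in the PostEditor object, removing an editor from a post should amount to deleting that join object. A minimal sketch, assuming the default delete mutation that @model generates for PostEditor (mutations are left enabled above; only queries are set to null):

```graphql
mutation RemoveEditorFromPost {
  # Deletes only the P1-U2 link created above.
  # Post P1 and User U2 themselves are not touched.
  deletePostEditor(input: { id: "P1U2" }) {
    id
  }
}
```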

You can query a given user with their posts:

query GetUserWithPosts {

getUser(id: "U1") {

id

username

posts {

items {

post {

title

}

}

}

}

}

You can also query a given post with its editors and list the posts of those editors, all in a single query:

query GetPostWithEditorsWithPosts {

getPost(id: "P1") {

id

title

editors {

items {

editor {

username

posts {

items {

post {

title

}

}

}

}

}

}

}

}

Alternative Definition

The above definition is the recommended way to create relationships between model types in your API. This involves defining index structures using @key and connection resolvers using @connection. There is an older parameterization of @connection that creates indices and connection resolvers that is still functional for backwards compatibility reasons. It is recommended to use @key and the new @connection via the fields argument.

directive @connection(name: String, keyField: String, sortField: String, limit: Int) on FIELD_DEFINITION

This parameterization is not compatible with @key. See the parameterization above to use @connection with indexes created by @key.

Usage

Relationships between data are specified by annotating fields on an @model object type with the @connection directive. You can use the keyField argument to specify what field should be used to partition the elements within the index and the sortField argument to specify how the records should be sorted.

Unnamed Connections

In the simplest case, you can define a one-to-one connection:

type Project @model {

id: ID!

name: String

team: Team @connection

}

type Team @model {

id: ID!

name: String!

}

After it’s transformed, you can create projects with a team as follows:

mutation CreateProject {

createProject(input: { name: "New Project", projectTeamId: "a-team-id"}) {

id

name

team {

id

name

}

}

}

Note: The Project.team resolver is configured to work with the defined connection.

Likewise, you can make a simple one-to-many connection as follows:

type Post @model {

id: ID!

title: String!

comments: [Comment] @connection

}

type Comment @model {

id: ID!

content: String!

}

After it’s transformed, you can create comments with a post as follows:

mutation CreateCommentOnPost {

createComment(input: { content: "A comment", postCommentsId: "a-post-id"}) {

id

content

}

}

Note: The postCommentsId field on the input may seem unusual. In the one-to-many case without a provided name argument there is only partial information to work with, which results in the unusual name. To fix this, provide a value for the @connection’s name argument and complete the bi-directional relationship by adding a corresponding @connection field to the Comment type.

Named Connections

The name argument specifies a name for the connection and it’s used to create bi-directional relationships that reference the same underlying foreign key.

For example, if you want your Post.comments and Comment.post fields to refer to opposite sides of the same relationship, you need to provide a name:

type Post @model {

id: ID!

title: String!

comments: [Comment] @connection(name: "PostComments", sortField: "createdAt")

}

type Comment @model {

id: ID!

content: String!

post: Post @connection(name: "PostComments", sortField: "createdAt")

createdAt: String

}

After it’s transformed, create comments with a post as follows:

mutation CreateCommentOnPost {

createComment(input: { content: "A comment", commentPostId: "a-post-id"}) {

id

content

post {

id

title

comments {

items {

id

# and so on...

}

}

}

}

}

When you query the connection, the comments will return sorted by their createdAt field.

query GetPostAndComments {

getPost(id: "...") {

id

title

comments {

items {

content

createdAt

}

}

}

}
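
If you need the newest comments first, the order can usually be reversed at query time. A minimal sketch, assuming the generated comments field accepts a sortDirection argument with ASC and DESC values; confirm this against the schema generated for your API:

```graphql
query GetPostAndNewestComments {
  getPost(id: "...") {
    id
    title
    # Assumption: sortDirection reverses the createdAt ordering.
    comments(sortDirection: DESC) {
      items {
        content
        createdAt
      }
    }
  }
}
```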

Many-To-Many Connections

You can implement many-to-many relationships using two one-to-many @connections and a joining @model. This parameterization does not use @key. For example:

type Post @model {

id: ID!

title: String!

editors: [PostEditor] @connection(name: "PostEditors")

}

# Create a join model and disable queries as you don't need them

# and can query through Post.editors and User.posts

type PostEditor @model(queries: null) {

id: ID!

post: Post! @connection(name: "PostEditors")

editor: User! @connection(name: "UserEditors")

}

type User @model {

id: ID!

username: String!

posts: [PostEditor] @connection(name: "UserEditors")

}

You can then create Posts & Users independently and join them in a many-to-many by creating PostEditor objects.

Limit

The default number of nested objects is 10. You can override this behavior by setting the limit argument. For example:

type Post @model {

id: ID!

title: String!

comments: [Comment] @connection(limit: 50)

}

type Comment @model {

id: ID!

content: String!

}
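
The limit argument on the directive sets the default page size for the connection. As a sketch of how a client might page through the results, the query below assumes the generated comments field also accepts limit and nextToken arguments at query time and returns a nextToken alongside items. The $nextToken variable name is illustrative.

```graphql
query GetPostWithComments($nextToken: String) {
  getPost(id: "a-post-id") {
    id
    title
    # Assumption: a per-request limit overrides the directive's default.
    comments(limit: 20, nextToken: $nextToken) {
      items {
        id
        content
      }
      # Pass this value back as $nextToken to fetch the next page.
      nextToken
    }
  }
}
```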

Generates

To keep connection queries fast and efficient, the GraphQL transform manages global secondary indexes (GSIs) on the generated tables on your behalf. We are investigating the use of adjacency lists alongside GSIs for connection-heavy use cases.

Note: After you have pushed a @connection directive you should not try to change it. If you try to change it, the DynamoDB UpdateTable operation will fail. Should you need to change a @connection, add a new @connection that implements the new access pattern, update your application to use the new @connection, and then delete the old @connection when it’s no longer needed.
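
The replacement procedure in the note can be sketched as a schema in its intermediate state, where the old and new connections coexist. This is an illustration under assumptions, not a verified migration: the field names commentsByDate and postByDate and the connection name "PostCommentsByDate" are hypothetical, and existing Comment records would likely need the new connection's foreign key backfilled before they appear through the new field.

```graphql
type Post @model {
  id: ID!
  title: String!
  # Existing connection, already pushed. Leave it unchanged for now.
  comments: [Comment] @connection(name: "PostComments")
  # New connection implementing the new access pattern (hypothetical names).
  commentsByDate: [Comment] @connection(name: "PostCommentsByDate", sortField: "createdAt")
}

type Comment @model {
  id: ID!
  content: String!
  createdAt: String
  post: Post @connection(name: "PostComments")
  postByDate: Post @connection(name: "PostCommentsByDate", sortField: "createdAt")
}
```

Once the application reads and writes only through the new fields, the old comments and post fields can be removed in a later push.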

@versioned

The @versioned directive adds object versioning and conflict resolution to a type. Do not use this directive when leveraging DataStore as the conflict detection and resolution features are automatically handled inside AppSync and are incompatible with the @versioned directive.

Definition

directive @versioned(versionField: String = "version", versionInput: String = "expectedVersion") on OBJECT

Usage

Add @versioned to a type that is also annotated with @model to enable object versioning and conflict detection for that type.

type Post @model @versioned {

id: ID!

title: String!

version: Int! # <- If not provided, it is added for you.

}

Creating a Post automatically sets the version to 1:

mutation Create {

createPost(input:{

title:"Conflict detection in the cloud!"

}) {

id

title

version # will be 1

}

}

Updating a Post requires passing the “expectedVersion”, which is the object’s last saved version